Node Replicated Kernel?

Node Replicated Kernel (NRK) is a research prototype OS that started as a project at VMware Research, but is now being developed collaboratively by a team of researchers from industry and academia. It is intended as a basis to explore ideas about the structure of operating systems for hardware of the future. NRK is written from scratch, almost all in Rust (with some assembly), and it runs on x86 platforms.

How is NRK different from Linux, Windows, or MacOS?

At present, NRK lacks many of the features of an operating system that make it usable by anyone other than systems researchers taking measurements of the system itself. For example, there is currently no GUI or Shell, and only very limited application support. In fact, it's probably easier to compare NRK in it's current form to a light-weight hypervisor rather than a fully-featured OS.

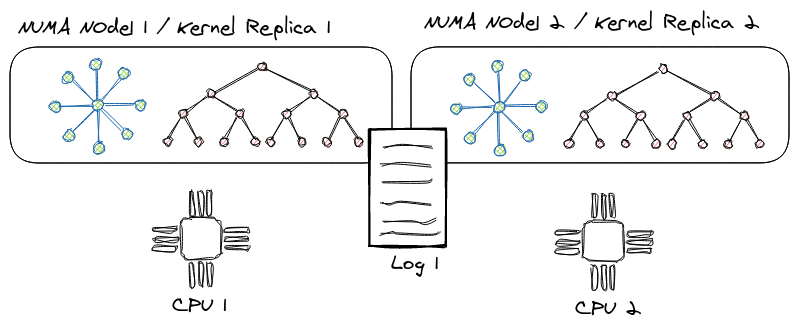

From an architectural point of view, the NRK kernel is a new and practical implementation of a multi-kernel OS:

A multikernel operating system treats a multi-core machine as a network of independent cores, as if it were a distributed system. It does not assume shared memory but rather implements inter-process communications as message-passing.

Unfortunately, such a model also brings unnecessary complexity: For example, the "original" multi-kernel (Barrelfish) relied on per-core communication channels and distributed protocols (2PC, 1PC) to achieve agreement, replication and sharing in the OS.

We overcome this complexity in NRK by using logs: The kernel relies primarily on single-threaded data structures which are automatically replicated in the system. Various operation logs make sure the replicas are always synchronized. Our technique (called node-replication) bears resemblance to state machine replication in distributed systems, and lifts our single-threaded data-structures into linearizable, concurrent structures. Our OSDI'21 paper has more details on scenarios where this approach can heavily outperform the scalability of traditional lock-based or lock-free data-structures.

In user-space, NRK runs applications in ring 3: Each application can be understood as a single isolated process/container/lightweight VM with little opportunity to share state with other processes. To run existing, well-known applications like memcached, LevelDB or Redis, a process can link against rumpkernel, which instantiates and runs the NetBSD kernel and its user-space libraries (libc, libpthread etc.) within the process' address-space and therefore adds decent support for POSIX.

Finally, NRK is written from scratch in Rust: We take advantage of the use of a safe language with a rich type-system for OS implementation, to gain better security and correctness guarantees at compile time, while not impacting performance negatively.

Kernel Architecture

The NRK kernel is a small, light-weight (multi-)kernel that provides a process abstraction with virtual memory, a coarse-grained scheduler, as well as an in-memory file-system.

One key feature of the kernel is how it scales to many cores (and NUMA nodes) by relying on data-structure replication with operation logging. We explain the two main techniques we use for this in the Node Replication and Concurrent Node Replication sections of this chapter.

Node Replication (NR)

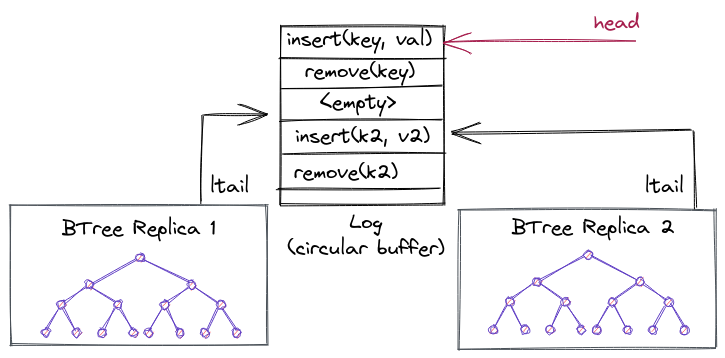

NR creates linearizable NUMA-aware concurrent data structures from black-box sequential data structures. NR replicates the sequential data structure on each NUMA node and uses an operation log to maintain consistency between the replicas. Each replica benefits from read concurrency using a readers-writer lock and from write concurrency using a technique called flat combining. In a nutshell, flat combining batches operations from multiple threads to be executed by a single thread (the combiner). This thread also appends the batched operations to the log; other replicas read the log to update their internal states with the new operations.

Next, we explain in more detail the three main techniques that NR makes use of: operation logs, scalable reader-writer locks and flat combining.

The operation log

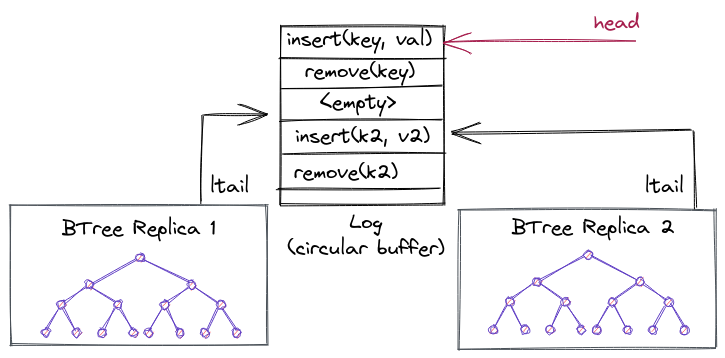

The log uses a circular buffer to represent the abstract state of the concurrent data structure. Each entry in the log represents a mutating operation, and the log ensures a total order. The log tail gives the index to the last operation added to the log.

A replica can lazily consume the log by maintaining a per-replica index into the log, indicating how far from the log the replica has been updated. The log is implemented as a circular buffer, so that entries can be garbage collected and reused. NR cannot garbage collect entries that have not been applied on each replica. This means at least one thread on each NUMA node must be executing operations on the data structure, otherwise the log can fill up and other replicas would not be able to make any more progress.

Next, we're going to explain the log in more detail for the curious reader (Warning: if you're not directly working on this, likely these subsections are pretty boring and can be skipped as well).

Log memory layout

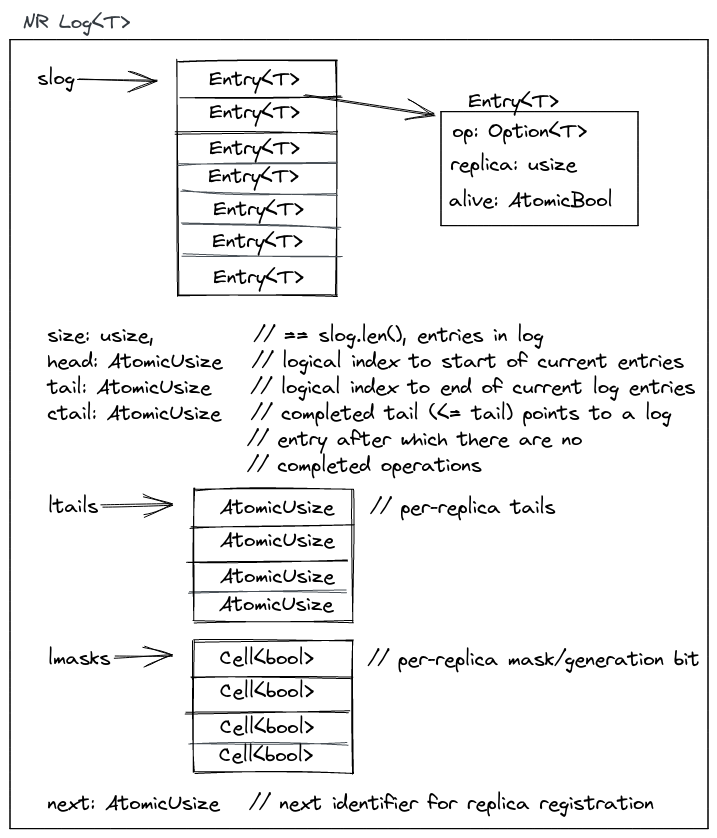

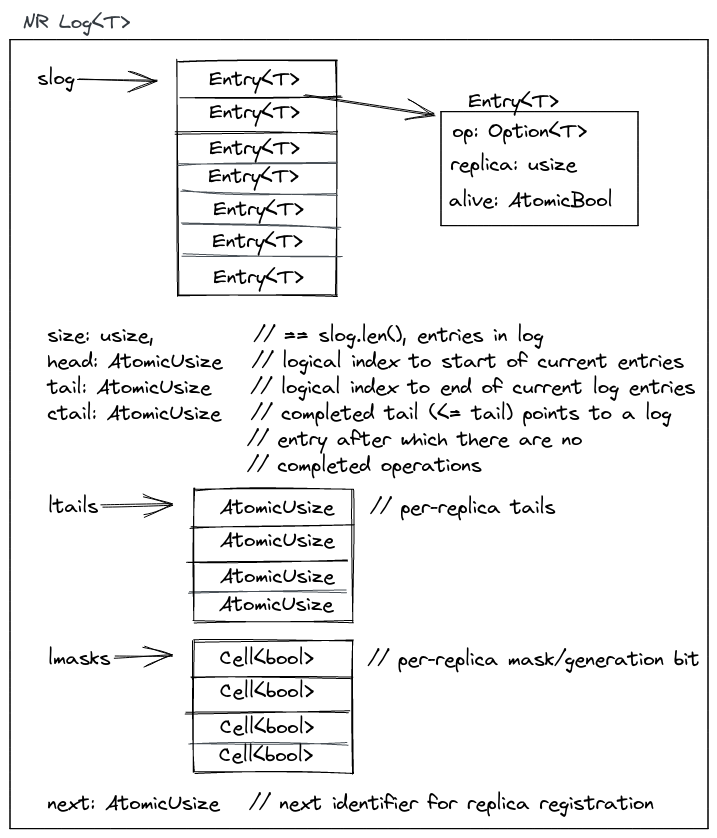

The

log

contains an array or slice of entries, slog, where it stores the mutable

operations from the replicas. It also maintains a global head and tail which

are a subregion in slog, and indicate the current operations in the log that

still need to be consumed by at least one replica or have not been garbage

collected by the log. The head points to the oldest "active" entry that still

needs to be processed, and the tail to the newest. All replicas have their own

ltail which tracks per-replica progress. At any point in time, an ltail will

point somewhere in the subregion given by head..tail. lmasks are generation

bits: they are flipped whenever a replica wraps around in the circular buffer.

next is used internally by the library to hand out a registration tokens for

replicas that register with the log (this is done only in the beginning, during

setup). It tracks how many replicas are consuming from/appending to the log.

The ctail is an optimization for reads and tracks the max of all ltails:

e.g., it points to the log entry (< tail) after which there are no completed

operations on any replica yet.

Consuming from the log

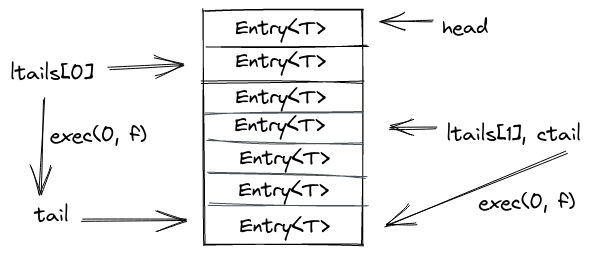

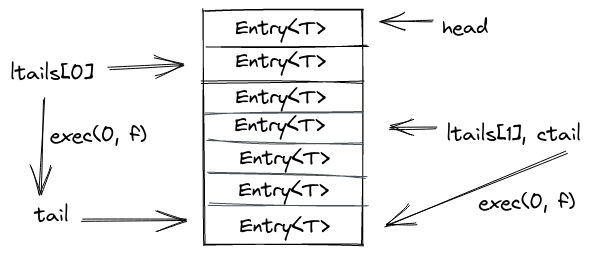

The exec

method

is used by replicas to execute any outstanding operations from the log. It takes

a closure that is executed for each outstanding entry, and a token to identify

the replica that calls exec.

Approximately, the method will do two things:

- Invoke the closure for every entry in the log which is in the sub-region

ltail..tailand afterwards setsltail = tail. - Finally, it may update the

ctailifltail > ctailto the new maximum.

Appending to the log

The append

operation

is invoked by replicas to insert mutable operations into the log. It takes a

slice of new operations coming from the replica and the same closure as in

exec to potentially apply some outstanding operations. This is necessary as

the log could be full and unable to insert more operations. Then, we'd first

have to update our own ltail a bit (or wait for other replicas to make

progress).

Approximately, the method will end up doing these steps:

- If there isn't enough space to insert our new batch of operations, try to consume some entries from the log until we have enough space available.

- Do a compare exchange on the log

tailto reserve space for our entries. - Insert entries into the log

- See if we can collect some garbage aka old log entries no longer used (next section).

Garbage collecting the log

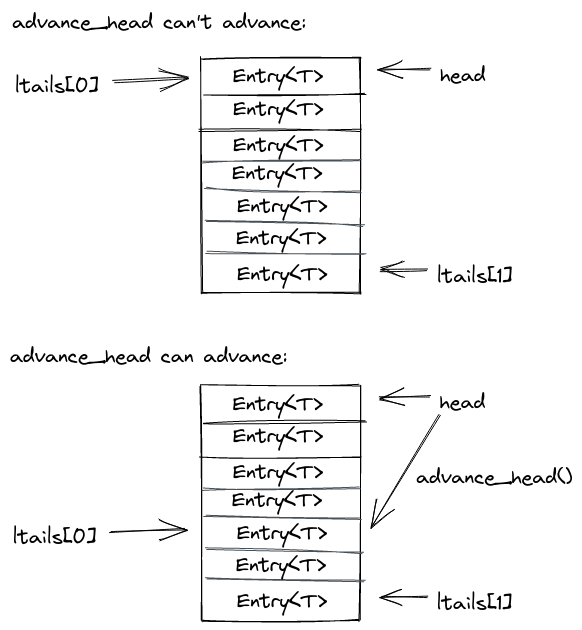

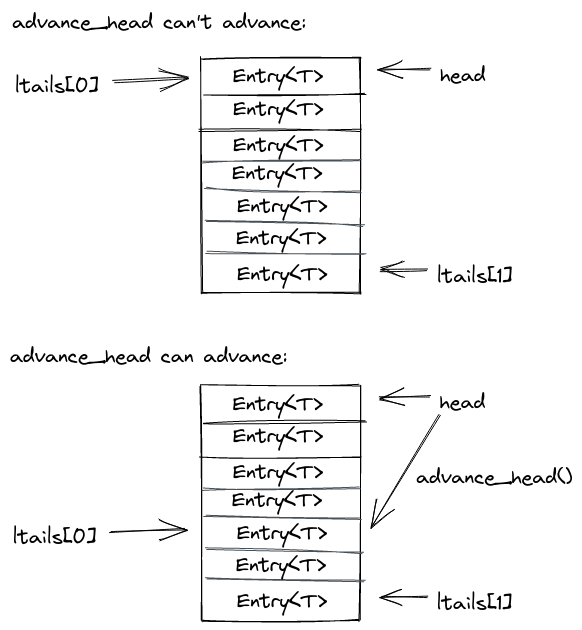

advance_head

has the unfortunate job to collect the log garbage. Luckily this amounts to as

little as periodically advancing the head pointer.

For that it computes the minium of all ltails. If this minimum still ends up

being the current head, it waits (and calls exec to try and advance its local

tail). Once the min ltail is bigger than the head, it will update the head to

that new minimum ltail and return.

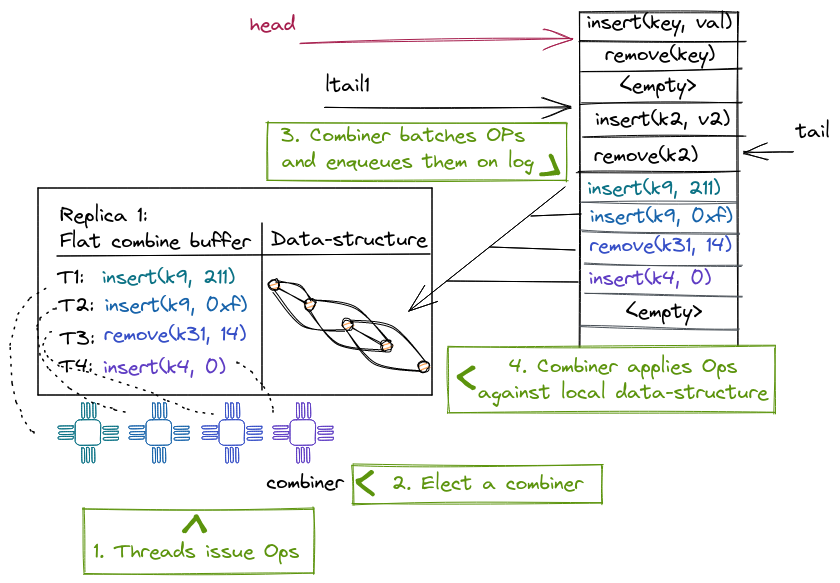

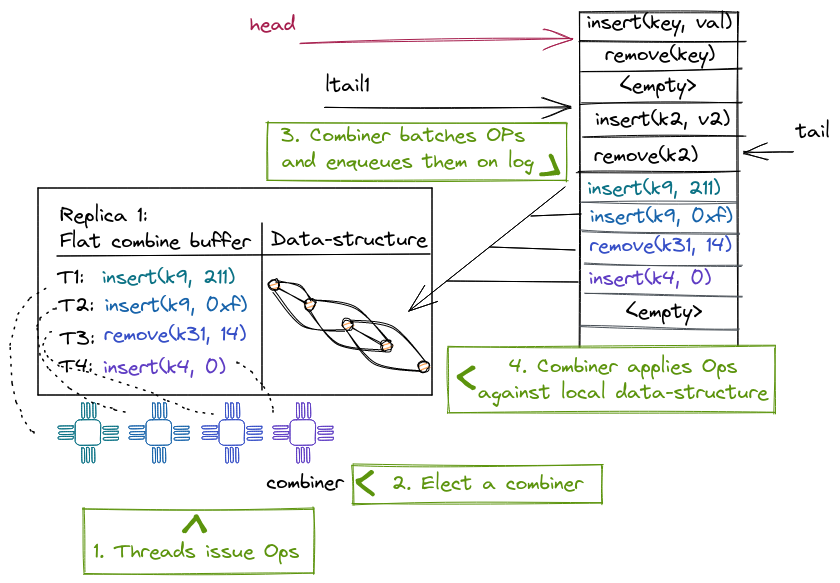

Flat combining

NR uses flat combining to allow threads running on the same socket to share a replica, resulting in better cache locality both from flat combining and from maintaining the replica local to the node's last-level cache.

-

The combiner can batch and combine multiple operations, resulting in a lower overall cost than executing each operation individually. For example, removing items from a priority queue can be done with a single atomic instruction for the whole batch instead of one atomic instruction for each operation.

-

A single thread accesses the data structure for multiple consecutive operations, ensuring cache locality for the whole batch of operations.

NR also benefits from combining operations placed on the log; the combiner does so using a single atomic operation for the whole batch instead of one atomic instruction per operation.

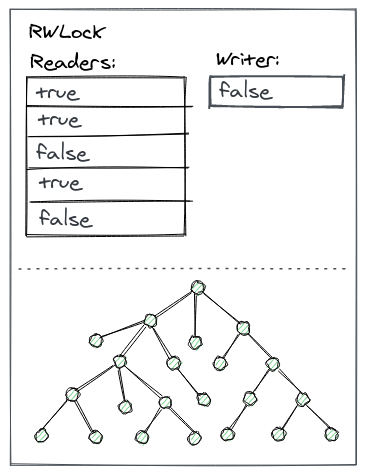

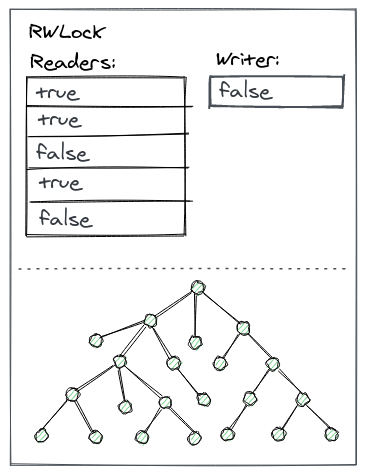

The optimized readers-writer lock

NR uses a writer-preference variant of the distributed RW lock to ensure the correct synchronization of the combiner and reader threads when accessing the sequential replica.

Each reader acquires a local per-thread lock; the writer has its own writer lock to indicate to the readers its intent to acquire the writer lock. A reader first checks the writer lock and then acquires its local lock if there is no writer; then it checks the writer lock again (while holding the lock) and releases the reader lock if it notices the writer declared its intent to acquire the lock (writer preference). The combiner also acquires the writer lock before modifying the replica. Thus, we give highest priority to the writer, because we don't want to delay update operations. This lock allows readers to read a local replica while the combiner is writing a batch of operations to the log, increasing parallelism.

If there is no combiner, a reader might have to acquire the writer lock to update the replica from the log to avoid a stale read, but this situation is rare.

A concurrent non-mutating operation (read)

A non-mutation operation (read) can execute on its thread's local replica, without creating a log entry, but it needs to ensure that the replica is not stale. To do so, it takes a snapshot of the log tail when the operation begins, and waits until a combiner updates the replica past the observed tail, or acquires the lock and updates the replica.

If there is no combiner, the read thread will acquire the writer lock and update the replica.

A concurrent mutating operation (update)

A thread T executing a mutating operation (update) needs to acquire the combiner lock. If the thread T executing this operation fails to acquire it, another thread is a combiner already and T spin-waits to receive its operation's result from the existing combiner.

However, T must still periodically attempt to become the combiner, in case the prior combiner missed T's operation.

If T acquires the lock, it becomes the combiner and executes all update operations of the concurrent threads on the same replica. To do so, T appends its operations to the shared log with a single atomic operation and ensures that the replica is up to date, before executing the operations and returning their results to the waiting threads.

Source and code example

Node-replication (NR) is released as a stand-alone library.

To give an idea, here is an example that transforms an single-threaded hash-table (from the Rust standard library) into a concurrent, replicated hash-table:

#![allow(unused)] fn main() { //! A minimal example that implements a replicated hashmap use std::collections::HashMap; use node_replication::Dispatch; use node_replication::Log; use node_replication::Replica; /// The node-replicated hashmap uses a std hashmap internally. #[derive(Default)] struct NrHashMap { storage: HashMap<u64, u64>, } /// We support mutable put operation on the hashmap. #[derive(Clone, Debug, PartialEq)] enum Modify { Put(u64, u64), } /// We support an immutable read operation to lookup a key from the hashmap. #[derive(Clone, Debug, PartialEq)] enum Access { Get(u64), } /// The Dispatch traits executes `ReadOperation` (our Access enum) /// and `WriteOperation` (our `Modify` enum) against the replicated /// data-structure. impl Dispatch for NrHashMap { type ReadOperation = Access; type WriteOperation = Modify; type Response = Option<u64>; /// The `dispatch` function applies the immutable operations. fn dispatch(&self, op: Self::ReadOperation) -> Self::Response { match op { Access::Get(key) => self.storage.get(&key).map(|v| *v), } } /// The `dispatch_mut` function applies the mutable operations. fn dispatch_mut(&mut self, op: Self::WriteOperation) -> Self::Response { match op { Modify::Put(key, value) => self.storage.insert(key, value), } } } }

As we can see we need to define two operation enums (Modify for mutable

operations that end up on the log, and Access for immutable/read operations).

Afterwards we need to implement the Dispatch trait from NR for our newly

defined data-structure that mainly is responsible to route the operations

defined in Access and Modify to the underlying data-structure. The full

example,

including how to create replicas and a log can be found in the NR repository.

Node Replication (NR)

NR creates linearizable NUMA-aware concurrent data structures from black-box sequential data structures. NR replicates the sequential data structure on each NUMA node and uses an operation log to maintain consistency between the replicas. Each replica benefits from read concurrency using a readers-writer lock and from write concurrency using a technique called flat combining. In a nutshell, flat combining batches operations from multiple threads to be executed by a single thread (the combiner). This thread also appends the batched operations to the log; other replicas read the log to update their internal states with the new operations.

Next, we explain in more detail the three main techniques that NR makes use of: operation logs, scalable reader-writer locks and flat combining.

The operation log

The log uses a circular buffer to represent the abstract state of the concurrent data structure. Each entry in the log represents a mutating operation, and the log ensures a total order. The log tail gives the index to the last operation added to the log.

A replica can lazily consume the log by maintaining a per-replica index into the log, indicating how far from the log the replica has been updated. The log is implemented as a circular buffer, so that entries can be garbage collected and reused. NR cannot garbage collect entries that have not been applied on each replica. This means at least one thread on each NUMA node must be executing operations on the data structure, otherwise the log can fill up and other replicas would not be able to make any more progress.

Next, we're going to explain the log in more detail for the curious reader (Warning: if you're not directly working on this, likely these subsections are pretty boring and can be skipped as well).

Log memory layout

The

log

contains an array or slice of entries, slog, where it stores the mutable

operations from the replicas. It also maintains a global head and tail which

are a subregion in slog, and indicate the current operations in the log that

still need to be consumed by at least one replica or have not been garbage

collected by the log. The head points to the oldest "active" entry that still

needs to be processed, and the tail to the newest. All replicas have their own

ltail which tracks per-replica progress. At any point in time, an ltail will

point somewhere in the subregion given by head..tail. lmasks are generation

bits: they are flipped whenever a replica wraps around in the circular buffer.

next is used internally by the library to hand out a registration tokens for

replicas that register with the log (this is done only in the beginning, during

setup). It tracks how many replicas are consuming from/appending to the log.

The ctail is an optimization for reads and tracks the max of all ltails:

e.g., it points to the log entry (< tail) after which there are no completed

operations on any replica yet.

Consuming from the log

The exec

method

is used by replicas to execute any outstanding operations from the log. It takes

a closure that is executed for each outstanding entry, and a token to identify

the replica that calls exec.

Approximately, the method will do two things:

- Invoke the closure for every entry in the log which is in the sub-region

ltail..tailand afterwards setsltail = tail. - Finally, it may update the

ctailifltail > ctailto the new maximum.

Appending to the log

The append

operation

is invoked by replicas to insert mutable operations into the log. It takes a

slice of new operations coming from the replica and the same closure as in

exec to potentially apply some outstanding operations. This is necessary as

the log could be full and unable to insert more operations. Then, we'd first

have to update our own ltail a bit (or wait for other replicas to make

progress).

Approximately, the method will end up doing these steps:

- If there isn't enough space to insert our new batch of operations, try to consume some entries from the log until we have enough space available.

- Do a compare exchange on the log

tailto reserve space for our entries. - Insert entries into the log

- See if we can collect some garbage aka old log entries no longer used (next section).

Garbage collecting the log

advance_head

has the unfortunate job to collect the log garbage. Luckily this amounts to as

little as periodically advancing the head pointer.

For that it computes the minium of all ltails. If this minimum still ends up

being the current head, it waits (and calls exec to try and advance its local

tail). Once the min ltail is bigger than the head, it will update the head to

that new minimum ltail and return.

Flat combining

NR uses flat combining to allow threads running on the same socket to share a replica, resulting in better cache locality both from flat combining and from maintaining the replica local to the node's last-level cache.

-

The combiner can batch and combine multiple operations, resulting in a lower overall cost than executing each operation individually. For example, removing items from a priority queue can be done with a single atomic instruction for the whole batch instead of one atomic instruction for each operation.

-

A single thread accesses the data structure for multiple consecutive operations, ensuring cache locality for the whole batch of operations.

NR also benefits from combining operations placed on the log; the combiner does so using a single atomic operation for the whole batch instead of one atomic instruction per operation.

The optimized readers-writer lock

NR uses a writer-preference variant of the distributed RW lock to ensure the correct synchronization of the combiner and reader threads when accessing the sequential replica.

Each reader acquires a local per-thread lock; the writer has its own writer lock to indicate to the readers its intent to acquire the writer lock. A reader first checks the writer lock and then acquires its local lock if there is no writer; then it checks the writer lock again (while holding the lock) and releases the reader lock if it notices the writer declared its intent to acquire the lock (writer preference). The combiner also acquires the writer lock before modifying the replica. Thus, we give highest priority to the writer, because we don't want to delay update operations. This lock allows readers to read a local replica while the combiner is writing a batch of operations to the log, increasing parallelism.

If there is no combiner, a reader might have to acquire the writer lock to update the replica from the log to avoid a stale read, but this situation is rare.

A concurrent non-mutating operation (read)

A non-mutation operation (read) can execute on its thread's local replica, without creating a log entry, but it needs to ensure that the replica is not stale. To do so, it takes a snapshot of the log tail when the operation begins, and waits until a combiner updates the replica past the observed tail, or acquires the lock and updates the replica.

If there is no combiner, the read thread will acquire the writer lock and update the replica.

A concurrent mutating operation (update)

A thread T executing a mutating operation (update) needs to acquire the combiner lock. If the thread T executing this operation fails to acquire it, another thread is a combiner already and T spin-waits to receive its operation's result from the existing combiner.

However, T must still periodically attempt to become the combiner, in case the prior combiner missed T's operation.

If T acquires the lock, it becomes the combiner and executes all update operations of the concurrent threads on the same replica. To do so, T appends its operations to the shared log with a single atomic operation and ensures that the replica is up to date, before executing the operations and returning their results to the waiting threads.

Source and code example

Node-replication (NR) is released as a stand-alone library.

To give an idea, here is an example that transforms an single-threaded hash-table (from the Rust standard library) into a concurrent, replicated hash-table:

#![allow(unused)] fn main() { //! A minimal example that implements a replicated hashmap use std::collections::HashMap; use node_replication::Dispatch; use node_replication::Log; use node_replication::Replica; /// The node-replicated hashmap uses a std hashmap internally. #[derive(Default)] struct NrHashMap { storage: HashMap<u64, u64>, } /// We support mutable put operation on the hashmap. #[derive(Clone, Debug, PartialEq)] enum Modify { Put(u64, u64), } /// We support an immutable read operation to lookup a key from the hashmap. #[derive(Clone, Debug, PartialEq)] enum Access { Get(u64), } /// The Dispatch traits executes `ReadOperation` (our Access enum) /// and `WriteOperation` (our `Modify` enum) against the replicated /// data-structure. impl Dispatch for NrHashMap { type ReadOperation = Access; type WriteOperation = Modify; type Response = Option<u64>; /// The `dispatch` function applies the immutable operations. fn dispatch(&self, op: Self::ReadOperation) -> Self::Response { match op { Access::Get(key) => self.storage.get(&key).map(|v| *v), } } /// The `dispatch_mut` function applies the mutable operations. fn dispatch_mut(&mut self, op: Self::WriteOperation) -> Self::Response { match op { Modify::Put(key, value) => self.storage.insert(key, value), } } } }

As we can see we need to define two operation enums (Modify for mutable

operations that end up on the log, and Access for immutable/read operations).

Afterwards we need to implement the Dispatch trait from NR for our newly

defined data-structure that mainly is responsible to route the operations

defined in Access and Modify to the underlying data-structure. The full

example,

including how to create replicas and a log can be found in the NR repository.

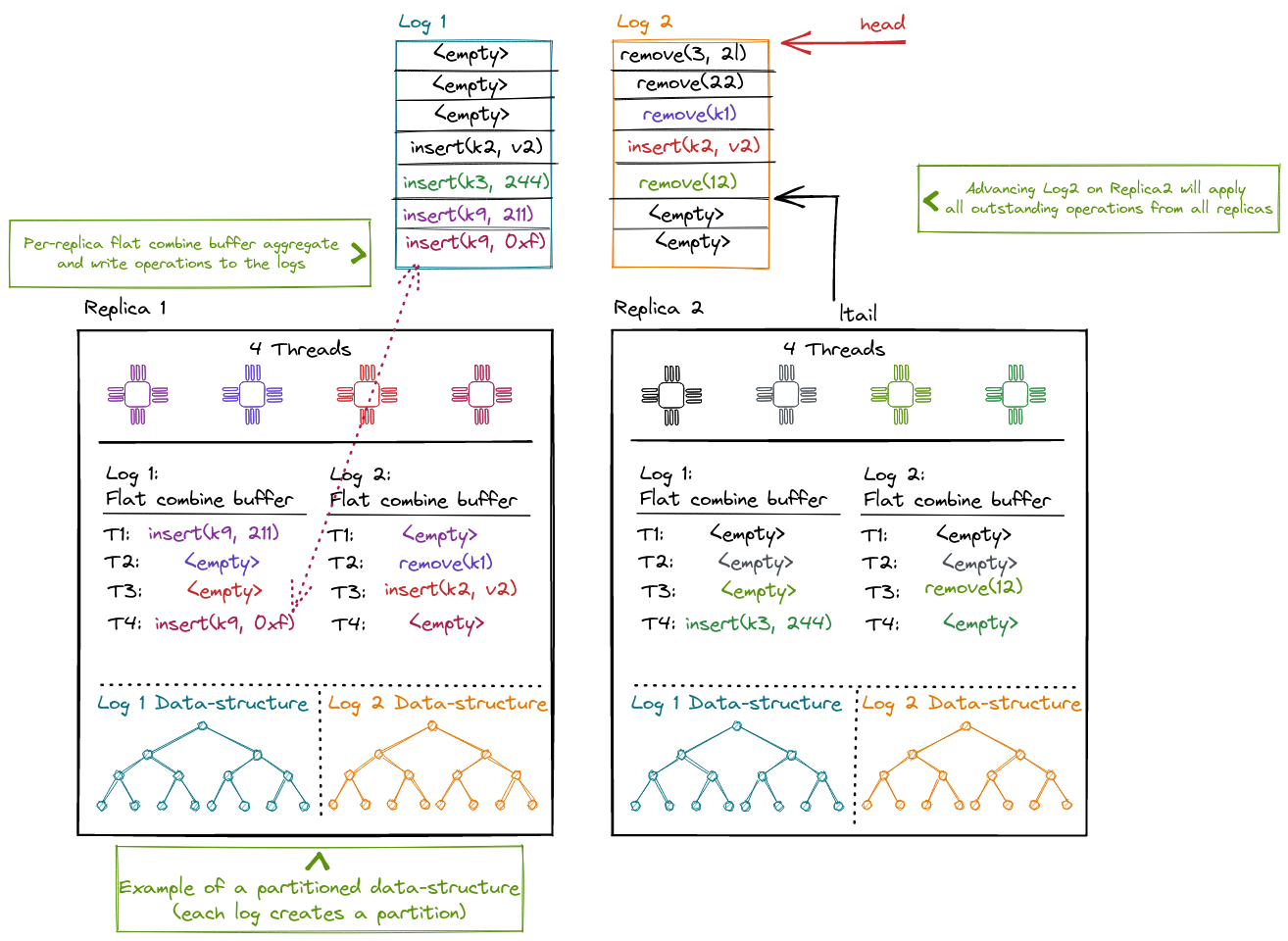

Concurrent Node Replication (CNR)

Some OS subsystems can become limited by node-replications (NR) single log if operations are frequently mutating but would otherwise naturally commute.

NR allows multiple combiners from different replicas to make progress in parallel, but its write-scalability is limited because

- all combiner threads are operating on a single, shared log; and

- each replica is a sequential data structure, which requires protection using a readers-writer lock.

To address these problems, we can use CNR, a technique that extends the original NR approach by leveraging operation commutativity present in certain data structures. Two operations are said to be commutative if executing them in either order leaves the data structure in the same abstract state. Otherwise, we say operations are conflicting.

As NR, CNR replicates a data structure across NUMA nodes and maintains the consistency of the replicas. However, CNR uses commutativity to scale the single NR shared log to multiple logs, by assigning commutative operations to different logs, while ensuring that conflicting operations always use the same log and thus have a total order. In addition, CNR can use concurrent or partitioned data structures for replicas, which allows for multiple concurrent combiners on each replica -- one for each shared log. This eliminates the per-replica readers-writer lock and scales access to the data structure.

CNR lifts an already concurrent data structure to a NUMA-aware concurrent data structure. The original data structure can be a concurrent (or partitioned) data structure that works well for a small number of threads (4-8 threads) within a single NUMA node. This data structure can be lock-free or lock-based and may result in poor performance under contention. CNR produces a concurrent data structure that works well for a large number of threads (e.g., 100s of threads) across NUMA nodes, and that is resilient to contention.

In CNR a replica can distribute commuting operations among different logs. Each replica maintains one flat-combining buffer per log where operations are aggregated. One elected combiner thread commits all outstanding operation in a batch to the log and then applies the ops against the local data-structure.

Compared to NR, the replicated data-structure is no longer protected by a single reader-writer lock by default. Instead, the data-structure can be partitioned (per-log) as in this diagram, use a lock-free approach, or rely on locking.

CNR Operations and Linearizability

CNR is useful for search data structures, with operations insert(x),

remove(x), lookup(x) and range-scan(x, y). These operations often benefit

from commutativity, which depends both on the abstract operation type and on its

input arguments. Similar to transactional

boosting, CNR considers the

abstract data type for establishing commutativity, not the concrete data

structure implementation.

Consider, for example, the insert(x) operation. Two operations are commutative

if they operate on distinct arguments: e.g., insert(x) and insert(y) are

commutative if x != y. A concrete implementation of the data structure could

be a sorted linked list. One might think that insert(x) and insert(x+1) are

not commutative because they operate on shared memory locations. However, the

original data structure already safely orders accesses to shared memory

locations, due to its concurrent nature. Hence, these operations commute for CNR

and can be safely executed concurrently.

Interface

CNR's interface is similar to NR, but it adds operation classes to express commutativity for the mutable and immutable operations. CNR relies on the provided data structure to identify conflicting operations by assigning them to the same operation class. CNR uses this information to allocate conflicting operations to the same shared log and, if they execute on the same NUMA node, to the same combiner too. In contrast, commutative operations can be executed by different combiners and can use different shared logs, allowing them to be executed concurrently.

As with NR, CNR executes different steps for mutating (update) and non-mutating (read) operations. Each of these operations uses only one of the multiple logs and is linearized according to its log's order. Different logs are not totally ordered, but operations belonging to different logs are commutative.

In addition, CNR special-cases another type of operation, scan, which belongs to more than one operation class. These are operations that conflict with many other operations (e.g., a length operation has to read the entire data structure to determine the size). If we assign this operation to a single class, all other operations need to be in the same class, eliminating the commutativity benefit.

Scan-type operations span multiple logs and need a consistent state of the replica across all the logs involved in the operation, obtained during the lifetime of the scan operation. To obtain a consistent state, the thread performing the scan collects an atomic snapshot of the log tails by inserting the operation in these logs. This atomic snapshot becomes the scan's linearization point.

We show the CNR API, with the additional traits implemented for expressing operation classes using our earlier example in the NR section:

#![allow(unused)] fn main() { use chashmap::CHashMap; use cnr::{Dispatch, LogMapper}; /// The replicated hashmap uses a concurrent hashmap internally. pub struct CNRHashMap { storage: CHashMap<usize, usize>, } /// We support a mutable put operation on the hashmap. #[derive(Debug, PartialEq, Clone)] pub enum Modify { Put(usize, usize), } /// This `LogMapper` implementation distributes the keys amoung multiple logs /// in a round-robin fashion. One can change the implementation to improve the /// data locality based on the data sturucture layout in the memory. impl LogMapper for Modify { fn hash(&self, nlogs: usize, logs: &mut Vec<usize>) { debug_assert!(logs.capacity() >= nlogs, "guarantee on logs capacity"); debug_assert!(logs.is_empty(), "guarantee on logs content"); match self { Modify::Put(key, _val) => logs.push(*key % nlogs), } } } /// We support an immutable read operation to lookup a key from the hashmap. #[derive(Debug, PartialEq, Clone)] pub enum Access { Get(usize), } /// `Access` follows the same operation to log mapping as the `Modify`. This /// ensures that the read and write operations for a particular key go to /// the same log. impl LogMapper for Access { fn hash(&self, nlogs: usize, logs: &mut Vec<usize>) { debug_assert!(logs.capacity() >= nlogs, "guarantee on logs capacity"); debug_assert!(logs.is_empty(), "guarantee on logs content"); match self { Access::Get(key) => logs.push(*key % nlogs), } } } /// The Dispatch traits executes `ReadOperation` (our Access enum) /// and `WriteOperation` (our Modify enum) against the replicated /// data-structure. impl Dispatch for CNRHashMap { type ReadOperation = Access; type WriteOperation = Modify; type Response = Option<usize>; /// The `dispatch` function applies the immutable operations. fn dispatch(&self, op: Self::ReadOperation) -> Self::Response { match op { Access::Get(key) => self.storage.get(&key).map(|v| *v), } } /// The `dispatch_mut` function applies the mutable operations. fn dispatch_mut(&self, op: Self::WriteOperation) -> Self::Response { match op { Modify::Put(key, value) => self.storage.insert(key, value), } } } }

CNR is available as a stand-alone rust library together with the NR code on github.

Comparison to NR and Notation

CNR benefits from the NR techniques, such as flat combining and operation logs with a succinct operation description, which reduce contention and inter-NUMA synchronization.

As NR, CNR has two drawbacks: increased memory footprint and increased computational cost from re-executing each operation on each replica. CNR has similar footprint to NR, because the single shared log can be split into multiple, smaller shared logs. However, CNR increases parallelism within each NUMA node by using a concurrent replica with multiple combiners and increases parallelism across NUMA nodes by using multiple (mostly) independent shared logs.

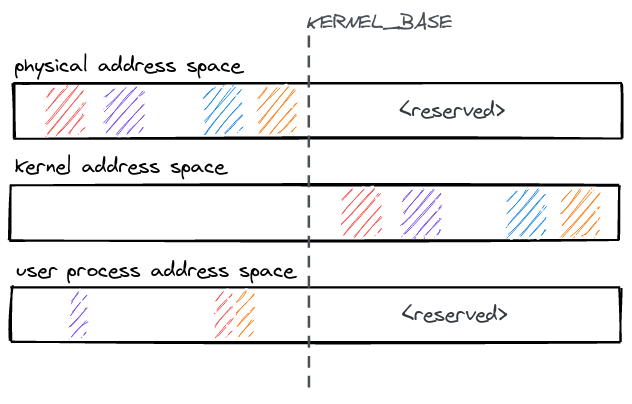

Memory

Kernel address space

The kernel address space layout follows a simple scheme: All physical memory is

identity mapped with a constant offset KERNEL_BASE in the kernel address

space. Therefore, any physical address can be accessed in the kernel by adding

KERNEL_BASE to it.

All physical memory is always accessible in the kernel and does not need to

mapped/unmapped at runtime. The kernel binary is linked as position independent

code, it is loaded into physical memory and then relocated to run at the kernel

virtual address (KERNEL_BASE + physical address).

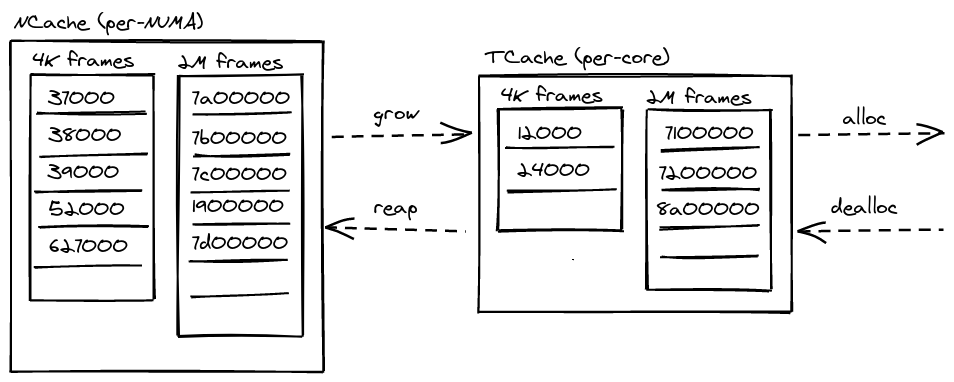

Physical memory

Physical memory allocation and dynamic memory allocation for kernel data structures are two basic subsystems that do not rely on NR. Replicated subsystems often require physical frames, but that allocation operation itself should not be replicated. For example, when installing a mapping in a page table, each page table entry should refer to the same physical page frame on all replicas (though, each replica should have its own page tables). If allocator state were replicated, each allocation operation would be repeated on each replica, breaking this.

At boot time, the affinity for memory regions is identified, and memory is divided into per-NUMA node caches (FrameCacheLarge). The FrameCacheLarge statically partitions memory further into two classes of 4 KiB and 2 MiB frames. Every core has a local FrameCacheSmall of 4 KiB and 2 MiB frames for fast, no-contention allocation when it contains the requested frame size. If it is empty, it refills from its local FrameCacheLarge. Similar to slab allocators, NRK' FrameCacheSmall and FrameCacheLarge implement a cache frontend and backend that controls the flow between TCaches and NCaches.

Dynamic memory

Since NRK is implemented in Rust, memory management is greatly simplified by relying on the compiler to track the lifetime of allocated objects. This eliminates a large class of bugs (use-after-free, uninitialized memory etc.), but the kernel still has to explicitly deal with running out of memory. NRK uses fallible allocations and intrusive data structures to handle out-of-memory errors gracefully.

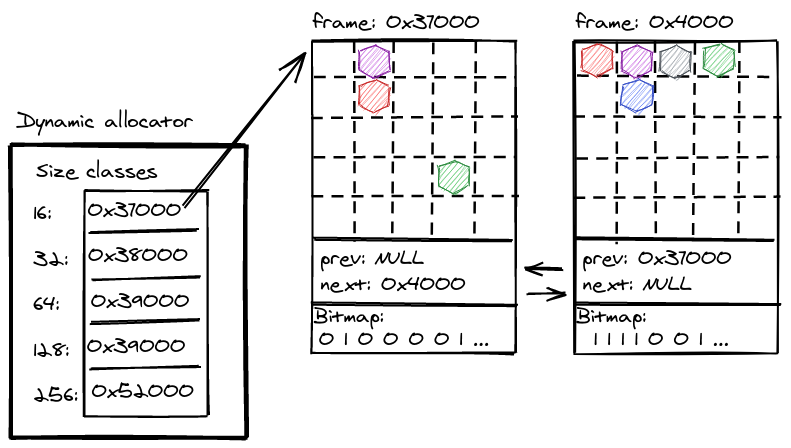

The dynamic memory allocator in nrk provides an implementation for the Rust global allocator interface. It uses size classes and different allocators per class (e.g., it's a segregated-storage allocator), while incorporating some of the simple and effective ideas from slab allocation: For each size class, 2MiB or 4 KiB frames are used which are sliced into equal sized objects of a given class. A bitfield at the end of every frame tracks the meta-data for objects within the frame (e.g., to determine if its allocated or not).

Deterministic memory

The kernel has to explicitly handle running out of memory. nrk uses fallible allocations to handle out-of-memory errors gracefully by returning an error to applications. We do this in almost all cases in the kernel (with some exceptions during initializations etc.) but some of the 3rd party dependencies (e.g., to parse ELF binaries) are not completely converted to fallible allocations yet.

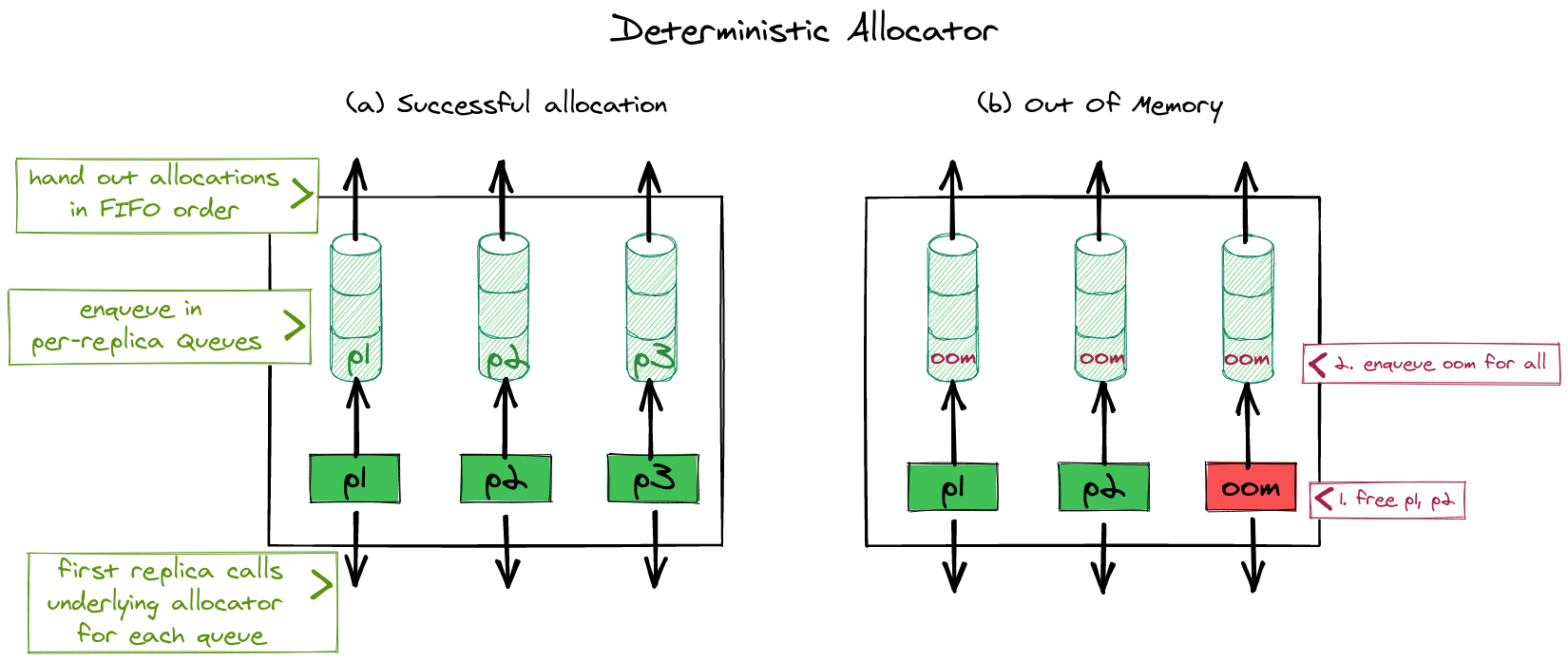

Another issue is that handling out-of-memory errors in presence of replicated data-structures becomes a little more challenging: Allocations which happen to store replicated state must be deterministic (e.g. they should either succeed on all replicas or none). Otherwise, the replicas would end up in an inconsistent state if after executing an operation, some replicas had successful and some had unsuccesful allocations. Making sure that all replicas always have equal amounts of memory available is infeasible because every replica replicates at different times and meanwhile allocations can happen on other cores for unrelated reasons. We solve this problem in nrk by requiring that all memory allocations for state within NR or CNR must go through a deterministic allocator. In the deterministic allocator, the first replica that reaches an allocation request allocates memory on behalf of all other replicas too. The deterministic allocator remembers the results temporarily, until they are picked up by the other replicas which are running behind. If an allocation for any of the replica fails, the leading replica will enqueue the error for all replicas, to ensure that all replicas always see the same result. Allocators in nrk are chainable and it is sufficient for the deterministic allocator to be anywhere in the chain so it doesn't necessarily have to be invoked for every fine-grained allocation request. Our implementation leverages custom allocators in Rust, which lets us override which heap allocator is used for individual data-structures.

Process structure

Virtual memory

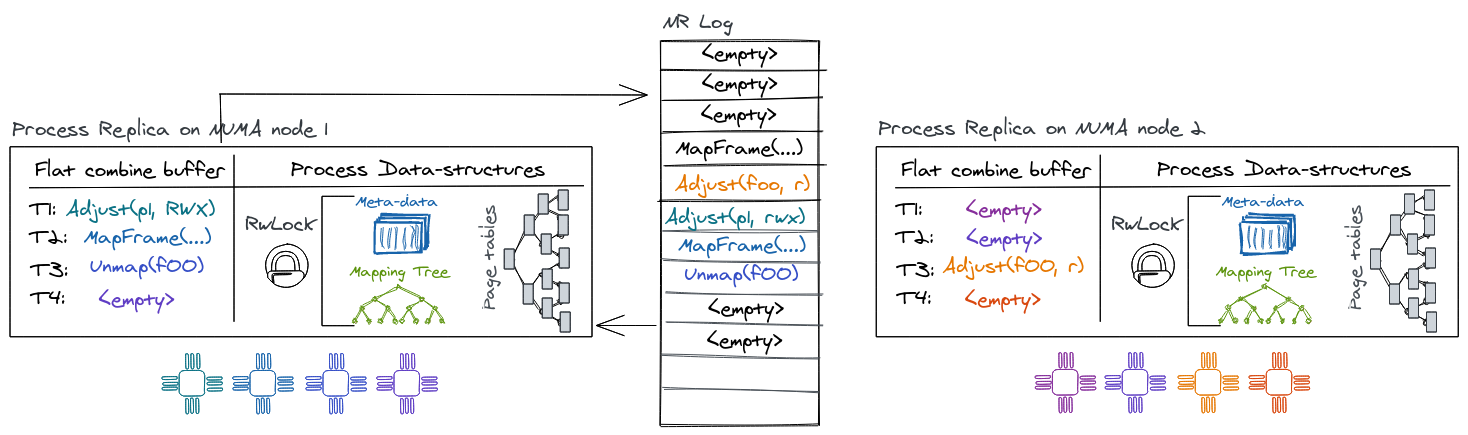

NRK relies on the MMU for isolation. Like most conventional virtual memory implementations, NRK uses a per-process mapping database (as a B-Tree) to store frame mappings which is used to construct the process's hardware page tables. NRK currently does not support demand paging. Both the B-Tree and the hardware page tables are simple, sequential data structures that are wrapped behind the NR interface for concurrency and replication.

Therefore, the mapping database and page tables are replicated on every NUMA

node, forming the biggest part of the process abstraction. A process exposes the

following mutating operations to modify its address space: MapFrame (to

insert a mapping); Unmap (to remove mappings); and Adjust (to change

permissions of a mapping). The virtual memory system also supports a

non-mutating Resolve operation (that advances the local replica and queries

the address space state).

Virtual memory and NR

There are several aspects of the virtual-memory design that are influenced by its integration with NR:

For example, the virtual-memory has to consider out-of-band read accesses by

cores' page table walkers. Normally a read operation would go through the NR

interface, ensuring replay of all outstanding operations from the log first.

However, a hardware page table walker does not have this capability. A race

arises if a process maps a page on core X of replica A and core Y of replica B

accesses that mapping in userspace before replica B has applied the update.

Luckily, this can be handled since it generates a page fault. In order to

resolve this race, the page-fault handler advances the replica by issuing a

Resolve operation on the address space to find the corresponding mapping of

the virtual address generating the fault. If a mapping is found, the process can

be resumed since the Resolve-operation will apply outstanding operations. If

no mapping is found, the access was an invalid read or write by the process.

Unmap or Adjust (e.g., removing or modifying page-table entries) requires

the OS to flush TLB entries on cores where the process is active to ensure TLB

coherence. This is typically done in software, by the OS, and commonly referred

to as performing a TLB shootdown. The initiator core will start by enqueuing the

operation for the local replica. After NR returns we know that the unmap (or

adjust) operation has been performed at least against the local page-table

replica and is enqueued as a future operation on the log for other replicas.

Next, we send inter-processor interrupts (IPIs) to trigger TLB flushes on all

cores running the corresponding process. As part of the IPI handler the cores

will first acknowledge the IPI to the initiator, next they must make sure to

advance their local replica with outstanding log operations (which forces the

unmap/adjust if not already applied), then poll a per-core message queue to get

information about the regions that need to be flushed, and finally perform the

TLB invalidation. Meanwhile the initiator will invalidate its own TLB entries

and then wait for all outstanding acknowledgments from other cores before it can

return to user-space.

Scheduler

In NRK, the kernel-level scheduler is a coarse-grained scheduler that allocates CPUs to processes. Processes make system calls to request for more cores and to give them up. The kernel notifies processes core allocations and deallocations via upcalls. To run on a core, a process allocates executor objects (i.e., the equivalent of a "kernel" thread) that are used to dispatch a given process on a CPU. An executor mainly consists of two userspace stacks (one for the upcall handler and one for the initial stack) and a region to save CPU registers and other metadata. Executors are allocated lazily but a process keeps a per-NUMA node cache to reuse them over time.

In the process, a userspace scheduler reacts to upcalls indicating the addition or removal of a core, and it makes fine-grained scheduling decisions by dispatching threads accordingly. This design means that the kernel is only responsible for coarse-grained scheduling decisions, and it implements a global policy of core allocation to processes.

The scheduler uses a sequential hash table wrapped with NR to map each process id to a process structure and to map process executors to cores. It has operations to create or destroy a process; to allocate and deallocate executors for a process; and to obtain an executor for a given core.

File System

The NrFS is a simple, in-memory file system in nrk that supports some POSIX file

operations (open, pread, pwrite, close, etc.).

NrFS tracks files and directories by mapping each path to an inode number and then mapping each inode number to an in-memory inode. Each inode holds either directory or file metadata and a list of file pages. The entire data structure is wrapped by CNR for concurrent access and replication.

User Space

The main user-space components are explained in more details in this chapter.

KPI: Kernel Public Interface

The Kernel Public Interface (KPI) is the lowest level user-space library that an application links with. As the name suggests it is the common interface definition between the kernel and user-space programs. It is special because it is the only library that is shared between user and kernel code.

The KPI contains the syscall interface and various struct definitions that are exchanged between the kernel and user-space. If in the future, we care about ABI compatibility, we would not try to keep the syscall ABI compatible but would rather enforce compatibility at the KPI boundary.

Typically, the KPI functionality will rarely be accessed directly by an

application. Instead, many parts of it are re-exported or wrapped by the

vibrio library OS. The kpi code is found in lib/kpi.

Lineup

Lineup is a user-space, cooperative thread scheduler that runs green-threads

(user-level threads). It supports many synchronization primitives (mutex,

rwlock, conditional variables, barriers etc.), thread-local storage, and has

some basic support for multi-threading. It uses

fringe for compiler-assisted

context-switching. The scheduler code is found in lib/lineup.

Upcalls

The kernel can notify the scheduler about events through an up-call mechanism, for example to notify about more available cores (or removal of cores), to forward device interrupts, or page-faults from kernel to user-space.

The mechanism for this is inspired by scheduler activations: The kernel and user-space program agree on a common save area to store a CPU context (on a per-core basis). If an event arrives at the kernel, it will save the current CPU context (registers etc.) in the agreed upon save-area and resume the process with a new (mostly empty) context that invokes the pre-registered upcall handler instead. The upcall handler gets all information about the event that triggered the interruption through function arguments so it can then take appropriate measures to react. After the event is handled, the upcall handler can read the previous context (from before the interruption) from the common save area and decide to resume where computation left off before the upcall (or decide not to continue with this context).

Vibrio

Virbio is the user-space library that provides most of the functionality

necessary to run applications in user-space. It is found in lib/vibrio.

Memory

The user-space memory manager provides a malloc and free like interface for

C code and a GlobalAlloc implementation for rust programs. We rely on the

same allocator that the kernel

uses for small--medium sized blocks (between 0 and 2 MiB). Everything else is

mapped directly by allocating memory with the map syscall.

RumpRT

A rumpkernels is a componentized NetBSD kernel that can run in many different environments. It contains file systems, a POSIX system call interface, many PCI device drivers, a SCSI protocol stack, virtio, a TCP/IP stack, libc and libpthread and more.

Vibrio has a rumprt module which provides the necessary low-level interface to

run a rumpkernel inside a user-space process (e.g., the

rumpuser API and some more). This has the

advantage that it's possible to run many POSIX compatible programs out of the

box without building a fully-fledged POSIX compatibility layer into NrOS.

- Bare-metal and Xen implementations for rumprun

- Some supported applications

- PhD thesis about rumpkernels

Vibrio dependency graph

Vibrio uses the following crates / dependencies:

vibrio

├── arrayvec

├── bitflags

├── crossbeam-utils

├── cstr_core

├── hashbrown

├── kpi

├── lazy_static

├── lineup

├── log

├── rawtime

├── rumpkernel

├── serde_cbor

├── slabmalloc

├── spin

└── x86

rkapps

The rkapps directory (in usr/rkapps) is an empty project aside from the

build.rs file. The build file contains the steps to clone and build a few

different well-known programs (memcached, LevelDB, Redis etc.) that we use to

test user-space and rumprt.

The checked-out program sources and binaries are placed in the following location as part of your build directory:

target/x86_64-nrk-none/<debug | release>/build/rkapps-$HASH/out/

The build for these programs can be a bit hard to understand. The following steps happen:

- Clone the packages repo which has build instructions for different POSIX programs running on rumpkernels.

- For each application that we want to build (enabled/disabled by feature

flags): Run

makein the respective directory. This will compile the application with the appropriate rumpkernel toolchain. The toolchain be found in a similar path inside the build directory:target/x86_64-nrk-none/<debug | release>/build/rumpkernel-$HASH - Linking binaries with vibrio which provides the low-level runtime for rumpkernels.

For more information on how to run rkapps applications, refer to the Benchmarking section.

Development

This chapter should teach you how to Build, Run, Debug, Test and Trace the OS.

Configuration

Some tips and pointers for setting up and configuring the development environment.

VSCode

VSCode generally works well for developing nrk. The rust-analyzer plugin is

preferred over rls which often has build issues due to the project not having

a std runtime (no-std).

Git

For first time git users or new accounts, you'll have to configure your username and email:

git config --global user.name "Gerd Zellweger"

git config --global user.email "mail@gerdzellweger.com"

To have better usability when working with submodules, you can configure git to

update submodules automatically when doing a git pull etc.

git config --global submodule.recurse true

Fetch multiple submodules in parallel:

git config --global submodule.fetchJobs 20

We don't allow merge requests on master, to always keep a linear history. The following alias can be helpful:

[alias]

purr = pull --rebase

Adding a new submodule to the repository

cd libgit submodule add <path-to-repo> <foldername>

Removing a submodule in the repository

- Delete the relevant section from the .gitmodules file.

- Stage the .gitmodules changes:

git add .gitmodules. - Delete the relevant section from .git/config.

- Run

git rm --cached path_to_submodule(no trailing slash). - Run

rm -rf .git/modules/path_to_submodule(no trailing slash). - Commit changes

Styleguide

Code format

We rely on rustfmt to automatically format our code.

Code organization

We organize/separate imports into three blocks (all separated by one newline):

- 1st block for core language things:

core,alloc,stdetc. - 2nd block for libraries:

vibrio,x86,lazy_staticetc. - 3rd block for internal imports:

crate::*,super::*etc. - 4th block for re-exports:

pub(crate) use::*etc. - 5th block for modules:

mod foo;etc.

Afterwards a .rs file should (roughly) have the following structure:

- 1st

typedeclarations - 2nd

constdeclarations - 3rd

staticdeclarations - 4th

struct,fn,impletc. declarations

Visibility

Avoid the use of pub in the kernel. Use pub(crate), pub(super) etc. This

helps with dead code elimination.

Assembly

We use AT&T syntax for assembly code (options(att_syntax) in Rust asm!

blocks)

Cargo features

Libraries and binaries only have non-additive / non-conflicting feature flags.

This helps to spot compilation problems quickly (e.g. with cargo build --all-features)

Errors

The KError type is used to represent errors in the kernel. Whenever possible,

each variant should only be used once/in a single location (to be easy to grep

for) and should have a descriptive name.

Formatting Commit Messages

We follow the conventions on How to Write a Git Commit Message.

Be sure to include any related GitHub issue references in the commit message. See GFM syntax for referencing issues and commits.

Github pull requests & history

Since github doesn't do fast-forward merges through the UI, after PR passes test, merge it on the command line to keep the same commit hashes of the branch in master:

git checkout master

git merge --ff-only feature-branch-name

Building

There are two sets of dependencies required for the development process: build and run dependencies. We typically build, develop and test using the latest Ubuntu LTS version and run nrk in QEMU. Other Linux systems will probably work but might require a manual installation of all dependencies. Other operating systems likely won't work out of the box without some adjustments for code and the build-process.

Check-out the source tree

Check out the nrk sources first:

git clone <repo-url>

cd nrk

The repository is structured using git submodules. You'll have to initialize and check-out the submodules separately:

In case you don't have the SSH key of your machine registered with a github account, you need to convert all submodule URLs to use the https protocol instead of SSH, to do so run this sed script before proceeding:

sed -i'' -e 's/git@github.com:/https:\/\/github.com\//' .gitmodules

git submodule update --init

Dependencies

If you want to build without Docker, you

can install both build and run dependencies by executing setup.sh in the root

of the repository directly on your machine (this requires the latest Ubuntu

LTS). The script will install all required OS packages, install Rust using

rustup and some additional rust programs and

dependencies. To run rackscale integration tests, you will also have to install

the DCM-based scheduler dependencies.

The build dependencies can be divided into these categories

- Rust (nightly) and the

rust-srccomponent for compiling the OS python3(and some python libraries) to execute the build and run script- Test dependencies (qemu, corealloc, dhcpd, redis-benchmark, socat, graphviz etc.)

- Rumpkernel dependencies (gcc, zlib1g etc.)

- Build for documentation (mdbook)

See scripts/generic-setup.sh function install_build_dependencies for

details.

Use Docker

We provide scripts to create a docker image which contains all build dependencies already.

To use Docker, it needs to be installed in your system. On Ubuntu execute the following steps:

sudo apt install docker.io sudo service docker restart sudo addgroup $USER docker newgrp docker

To create the image execute the following command in the /scripts directory.

bash ./docker-run.sh

This will create the docker image and start the container. You will be dropped into a shell running inside the Docker container. You can build the OS as if you had installed the dependencies natively.

The script will create a user inside the docker container that corresponds to the user on the host system (same username and user ID).

You can rebuild the image with:

bash ./docker-run.sh force-build

To exit the container, just type exit to terminate the shell.

Build without running

To just build the OS invoke the run.py script (in the kernel directory) with

the -n parameter (no-run flag).

python3 kernel/run.py -n

If you want to run the build in a docker container, run bash ./scripts/docker-run.sh beforehand. The source directory tree will be mounted

in the docker container in /source.

Using run.py

The kernel/run.py script provides a simple way to build, deploy and run the

system in various settings and configuration. For a complete set of parameters

and config options refer to the run.py --help instructions.

As an example, the following invocation

python3 run.py --kfeatures test-userspace --cmd='log=info init=redis.bin' --mods rkapps init --ufeatures rkapps:redis --machine qemu --qemu-settings='-m 1024M' --qemu-cores 2

will

- compile the kernel with Cargo feature

test-userspace - pass the kernel the command-line arguments

log=info init=redis.binon start-up (sets logging to info and starts redis.bin for testing) - Compile two user-space modules

rkapps(with cargo feature redis) andinit(with no features) - Deploy and run the compiled system on

qemuwith 1024 MiB of memory and 2 cores allocated to the VM

If Docker is used as build environment, it is necessary to first compile the system with the required features inside the Docker container:

python3 run.py --kfeatures test-userspace --mods rkapps init --ufeatures rkapps:redis -n

Afterwards, the aforementioned command can be used to run NRK outside the

Docker container with the given configuration. The run.py script will

recognize that the system has already been build and will directly start

qemu.

Sometimes it's helpful to know what commands are actually executed by run.py.

For example to figure out what the exact qemu command line invocation was. In

that case, --verbose can be supplied.

Depending on the underlying system configuration NRK may abort because a connection to the local network can not be established. In this case, the following steps can help to resolve this issue:

- Disable AppArmor. Detailed instructions can be found here.

- Manually start the DHCP server immediately after NRK has started:

sudo dhcpd -f -d tap0 --no-pid -cf ./kernel/tests/dhcpd.conf

Baremetal execution

The kernel/run.py script supports execution on baremetal machines with

the --machine argument:

python3 run.py --machine b1542 --verbose --cmd "log=info"

This invocation will try to run nrk on the machine described by a

b1542.toml config file.

A TOML file for a machine has the following format:

[server]

# A name for the server we're trying to boot

name = "b1542"

# The hostname, where to reach the server

hostname = "b1542.test.com"

# The type of the machine

type = "skylake2x"

# An arbitrary command to set-up the PXE boot enviroment for the machine

# This often involves creating a hardlink of a file with a MAC address

# of the machine and pointing it to some pxe boot directory

pre-boot-cmd = "./pxeboot-configure.sh -m E4-43-4B-1B-C5-DC -d /home/gz/pxe"

# run.py support only booting machines that have an idrac management console:

[idrac]

# How to reach the ilo/iDRAC interface of the machine

hostname = "b1542-ilo.test.com"

# Login information for iDRAC

username = "user"

password = "pass"

# Serial console which we'll read from

console = "com2"

# Which iDRAC version we're dealing with (currently unused)

idrac-version = "3"

# Typical time until machine is booted

boot-timeout = 320

[deploy]

# Server where binaries are deployed for booting with iPXE

hostname = "ipxe-server.test.com"

username = "user"

ssh-pubkey = "~/.ssh/id_rsa"

# Where to deploy kernel and user binaries

ipxe-deploy = "/home/gz/public_html/"

An iPXE environment that the machine will boot from needs to be set-up. The iPXE bootloader should be compiled with UEFI and ELF support for running with nrk.

Note that the current support for bare-metal execution is currently limited to DELL machines with an iDRAC management console (needed to reboot the server). Ideally, redfish or SNMP support will be added in the future.

Compiling the iPXE bootloader

TBD.

Debugging

Currently the debugging facilities are not as good as on a production operating

systems. However, there are some options available: gdb, printf-style

debugging, logging and staring at code. We will discuss the options in this

chapter.

GDB support in the kernel

tldr: To use gdb, add

--kgdbtorun.py.

NRK provides an implementation for the gdb remote protocol using a separate serial line for communication. This means you can use gdb to connect to the running system.

To use it, start run.py with the --kgdb argument. Once booted, the following

line will appear:

Waiting for a GDB connection on I/O port 0x2f8...

Use `target remote localhost:1234` in gdb session to connect

Next, connect with GDB to the kernel, using:

$ cd kernel

$ gdb ../target/x86_64-uefi/<debug | release>/esp/kernel

[...]

(gdb) target remote localhost:1234

Remote debugging using localhost:1234

[...]

If you execute gdb in the kernel directory, the

.gdbinitfile there already executestarget remote localhost:1234for you. But you have to add the kernel directory as a "trusted" path by adding this line to$HOME/.gdbinit:add-auto-load-safe-path <REPO-BASE>/kernel/.gdbinit

The GDB dashboard works as well,

just insert target remote localhost:1234 at the top of the .gdbinit file.

Breakpoints

tldr: use

breakorhbreakin gdb.

Currently the maximum limit of supported breakpoints (and watchpoints) is four.

Why? Because we use the x86-64 debug registers for breakpoints and there are only 4 such registers. Our gdb stub implements both software and hardware breakpoints with the debug registers.

An alternative technique would be to either insert int3 into .text for

software interrupts (or let gdb do it automatically if software interrupts are

marked as not supported by the stub). However, this is a bit more complicated

because we need to prevent cascading breakpoints (e.g., the debug interrupt

handler should ideally not hit a breakpoint while executing). With only debug

registers, this is fairly easy to achieve, as we just have disable them on entry

and re-enable them when we resume, whereas the int3 approach would involve

patching/reverting a bunch of .text offsets. On the plus side it would enable

an arbitrary amount of breakpoints if this ever becomes necessary.

Watchpoints

Again the maximum limit is four watchpoints (and breakpoints) at the same time.

Use watch -l <variable> to set a watchpoint. The -l option is important

because it watches the memory location of the variable/expression rather than

the expression. Normal watch is not supported as gdb may try to overwrite

.text locations (which are mapped only as read-execute) in the kernel.

printf debugging with the log crate

Here are a few tips:

- Change the log-level of the kernel to info, debug, or even trace:

python3 run.py --cmd='log=info' - Logging can also be enabled per-module basis. For example, to enable trace

output for just the

gdbstublibrary and thegdbmodule in the kernel this is how the necessary--cmdinvocation forrun.pywould look like:--cmd "log='gdbstub=trace,nrk::arch::gdb=trace'" - Change the log-level of the user-space libOS in vibrio (search for

Level::) - Make sure the Tests run (to see if something broke).

Figuring out why things failed

Maybe you'll encounter failures, for example like this one:

[IRQ] GENERAL PROTECTION FAULT: From Any memory reference and other protection checks.

No error!

Instruction Pointer: 0x534a39

ExceptionArguments { vec = 0xd exception = 0x0 rip = 0x534a39, cs = 0x23 rflags = 0x13206 rsp = 0x5210400928 ss = 0x1b }

Register State:

Some(SaveArea

rax = 0x0 rbx = 0x0 rcx = 0x0 rdx = 0x0

rsi = 0x0 rdi = 0x5210400a50 rbp = 0x5210400958 rsp = 0x5210400928

r8 = 0x2 r9 = 0x5202044c00 r10 = 0x3 r11 = 0x28927a

r12 = 0x520e266810 r13 = 0x7d8ac0 r14 = 0x6aaaf9 r15 = 0x686680

rip = 0x534a39 rflags = FLAGS_RF | FLAGS_IOPL1 | FLAGS_IOPL2 | FLAGS_IOPL3 | FLAGS_IF | FLAGS_PF | FLAGS_A1)

stack[0] = 0x5210400958

stack[1] = 0x53c7fd

stack[2] = 0x0

stack[3] = 0x0

stack[4] = 0x0

stack[5] = 0x0

stack[6] = 0x52104009b8

stack[7] = 0x534829

stack[8] = 0x5210400a50

stack[9] = 0x5210400a50

stack[10] = 0x0

stack[11] = 0x268

The typical workflow to figure out what went wrong:

- Generally, look for the instruction pointer (

ripwhich is0x534a39in our example). - If the instruction pointer (and

rspandrbp) is below kernel base, we were probably in user-space when the failure happened (you can also determine it by looking at cs/ss but it's easier to tell from the other registers). - Determine exactly where the error happened. To do this, we need to find the

right binary which was running. Those are usually located in

target/x86_64-uefi/<release|debug>/esp/<binary>. - Use

addr2line -e <path to binary> <rip>to see where the error happened. - If the failure was in kernel space, make sure you adjust any addresses by

substracting the PIE offset where the kernel binary was executing in the

virtual space. Look for the following line

INFO: Kernel loaded at address: 0x4000bd573000, it's printed by the bootloader early during the boot process. Substract the printed number to get the correct offset in the ELF file. - Sometimes

addr2linedoesn't find anything, it's good to check with objdump, which also gives more context:objdump -S --disassemble --demangle=rustc target/x86_64-uefi/<release|debug>/esp/<binary> | less - The function that gets reported might not be useful (e.g., if things fail in

memcpy). In this case, look for addresses that could be return addresses on the stack dump and check them too (e.g.,0x534829looks suspiciously like a return address). - If all this fails, something went wrong in a bad way, maybe best to go back to printf debugging.

Always find the first occurrence of a failure in the serial log. Because our backtracing code is not very robust, it still quite often triggers cascading failures which are not necessarily relevant.

Debugging rumpkernel/NetBSD components

nrk user-space links with a rather large (NetBSD) code-base. When things go wrong somewhere in there, it's sometimes helpful to temporarily change or get some debug output directly in the C code.

You can edit that code-base directly since it gets checked out and built in the

target directory. For example, to edit the rump_init function, open the file

in the rumpkern folder of the NetBSD source here:

target/x86_64-nrk-none/release/build/rumpkernel-$HASH/out/src-netbsd/sys/rump/librump/rumpkern/rump.c

Make sure to identify the correct $HASH that is used for the build if you find

that there are multiple rumpkernel-* directories in the build dir, otherwise

your changes won't take effect.

After you're done with edits, you can manually invoke the build, and launch the OS again.

As a simple example you can search for rump_init(void) in

target/x86_64-nrk-none/release/build/rumpkernel-$HASH/out and add a printf

statement there, then the following steps should ensure the print also appears

on the console:

cd target/x86_64-nrk-none/release/build/rumpkernel-$HASH/out

./build-rr.sh -j24 nrk -- -F "CFLAGS=-w"

# Delete ../target/x86_64-nrk-none/debug/build/init-* to force rebuild of init...

# Invoke run.py again...

If you change the compiler/rustc version, do a clean build, or delete the target directory your changes might be overridden as the sources exist only inside the build directory (

target). It's a good idea to save changes somewhere for safekeeping if they are important.

Debugging in QEMU/KVM

If the system ends up in a dead-lock, you might be able to get a sense of where things went south by asking qemu. Deadlocks with our kernel design are rare, but in user-space (thanks to locking APIs) it can definitely happen.

The following steps should help:

- Add

--qemu-monitorto the run.py invocation to start the qemu monitor. - Connect to the monitor in a new terminal with

telnet 127.0.0.1 55555. - You can use

info registers -ato get a dump of the current register state for all vCPUs or any other command to query the hypervisor state. - If you're stuck in some loop, getting a couple register dumps might tell you

more than invoking

info registersjust once.

When developing drivers that are emulated in qemu, it can be useful to enable

debug prints for the interface in QEMU to see what state the device is in. For

example, to enable debug output for vmxnet3 in the sources, you can change the

#undef statements in hw/net/vmxnet_debug.h to #define and recompile the

qemu sources (your changes should look similar to this snippet below):

#define VMXNET_DEBUG_CB

#define VMXNET_DEBUG_INTERRUPTS

#define VMXNET_DEBUG_CONFIG

#define VMXNET_DEBUG_RINGS

#define VMXNET_DEBUG_PACKETS

#define VMXNET_DEBUG_SHMEM_ACCESS

Testing

If you've found and fixed a bug, we better write a test for it. nrk uses several test-frameworks and methodologies to ensure everything works as expected:

- Regular unit tests: Those can be executed running

cargo testin the project folder. Sometimes addingRUST_TEST_THREADS=1is necessary due to the structure of the runner/frameworks used. This should be indicated in the individual READMEs. - A slightly more exhaustive variant of unit tests is property based testing. We use proptest to make sure that the implementation of kernel sub-systems corresponds to a reference model implementation.

- Integration tests are found in the kernel, they typically launch a qemu instance and use rexpect to interact with the guest.

- Fuzz testing: TBD.

Running tests

To run the unit tests of the kernel:

cd kernelRUST_BACKTRACE=1 RUST_TEST_THREADS=1 cargo test --bin nrk

To run the integration tests of the kernel:

cd kernelRUST_TEST_THREADS=1 cargo test --test '*'

If you would like to run a specific integration test you can pass it with --:

RUST_TEST_THREADS=1 cargo test --test '*' -- userspace_smoke

If you would like to run a specific set of integration tests, you can specify the file name with --test:

RUST_TEST_THREADS=1 cargo test --test s00_core_tests

In case an integration test fails, adding --nocapture at the end (needs to

come after the --) will make sure that the underlying run.py invocations are

printed to the stdout. This can be helpful to figure out the exact run.py

invocation that a test is doing so you can invoke it yourself manually for

debugging.

Parallel testing for he kernel is not possible at the moment due to reliance on build flags for testing.

The commitable.sh script automatically runs the unit and integration tests:

cd kernel

bash commitable.sh

Writing a unit-test for the kernel

Typically these can just be declared in the code using #[test]. Note that

tests by default will run under the unix platform. A small hack is necessary

to allow tests in the x86_64 to compile and run under unix too: When run on a

x86-64 unix platform, the platform specific code of the kernel in arch/x86_64/

will be included as a module named x86_64_arch whereas normally it would be

arch. This is a double-edged sword: we can now write tests that test the

actual bare-metal code (great), but we can also easily crash the test process by

calling an API that writes an MSR for example (e.g, things that would require

ring 0 priviledge level).

Writing an integration test for the kernel

Integration tests typically spawns a QEMU instance and beforehand compiles the kernel/user-space with a custom set of Cargo feature flags. Then it parses the qemu output to see if it gave the expected output. Part of those custom compile flags will also choose a different main() function than the one you're seeing (which will go off to load and schedule user-space programs for example).

There is two parts to the integration test.

- The host side (that will go off and spawn a qemu instance) for running the

integration tests. It is found in

kernel/tests. - The corresponding main functions in the kernel that gets executed for a

particular example are located at

kernel/src/integration_main.rs

To add a new integration test the following tests may be necessary:

- Modify

kernel/Cargo.tomlto add a feature (under[features]) for the test name. - Optional: Add a new

xmainfunction and test implementation in it tokernel/src/integration_main.rswith the used feature name as an annotation. It may also be possible to re-use an existing xmain function, in that case make not of the feature name used to include it. - Add a runner function to one of the files in

kernel/teststhat builds the kernel with the cargo feature runs it and checks the output.

Integration tests are divided into categories and named accordingly (partially to ensure the tests run in a sensible order):

s00_*: Core kernel functionality like boot-up and fault handlings01_*: Low level kernel services: SSE, memory allocation etc.s02_*: High level kernel services: ACPI, core booting mechanism, NR, VSpace etc.s03_*: High level kernel functionality: Spawn cores, run user-space programss04_*: User-space runtimess05_*: User-space applicationss06_*: Rackscale (distributed) tests

Benchmarks are named as such:

s10_*: User-space applications benchmarkss11_*: Rackscale (distributed) benchmarks

The s11_* benchmarks may be configured with two features:

baseline: Runs NrOS configured similarly to rackscale, for comparisonaffinity-shmem: Runs theivshmem-serverusing shmem with NUMA affinity. This option requires preconfiguring hugetlbfs withsudo hugeadm --create-global-mounts, having a kernel with 2MB huge pages enabled, and then also adding 1024 2MB pages per node, with a command like:echo <page-num> | sudo numactl -m <node-num> tee -a /proc/sys/vm/nr_hugepages_mempolicyThe number of huge pages per node may be verified withnumastat -m.

Network

nrk has support for three network interfaces at the moment: virtio, e1000 and

vmxnet3. virtio and e1000 are available by using the respective rumpkernel

drivers (and it's network stack). vmxnet3 is a standalone implementation that

uses smoltcp for the network stack and is also capable of running in ring 0.

Network Setup

The integration tests that run multiple instances of nrk require

bridged tap interfaces. For those integration tests, the test framework calls

run.py with the --network-only flag which will destroy existing conflicting

tap interfaces and create new tap interface(s) for the test based on the

number of hosts in the test. Then, to run the nrk instances, run.py is invoked

with the --no-network-setup flag.

To setup the network for a single client and server (--workers clients+server), run the following command:

python3 run.py --kfeatures integration-test --cmd "test=network_only" net --workers 2 --network-only

Ping

A simple check is to use ping (on the host) to test the network stack

functionality and latency. Adaptive ping -A, flooding ping -f are good modes

to see that the low-level parts of the stack work and can handle an "infinite"

amount of packets.

Some expected output if it's working:

$ ping 172.31.0.10

64 bytes from 172.31.0.10: icmp_seq=1 ttl=64 time=0.259 ms

64 bytes from 172.31.0.10: icmp_seq=2 ttl=64 time=0.245 ms

64 bytes from 172.31.0.10: icmp_seq=3 ttl=64 time=0.267 ms

64 bytes from 172.31.0.10: icmp_seq=4 ttl=64 time=0.200 ms

For network tests, it's easiest to start a DHCP server for the tap interface so the VM receives an IP by communicating with the server:

# Stop apparmor from blocking a custom dhcp instance

service apparmor stop

# Terminate any (old) existing dhcp instance

sudo killall dhcpd

# Spawn a dhcp server, in the kernel/ directory do:

sudo dhcpd -f -d tap0 --no-pid -cf ./tests/dhcpd.conf

A fully automated CI test that checks the network using ping is available as well, it can be invoked with the following command:

RUST_TEST_THREADS=1 cargo test --test '*' -- s04_userspace_rumprt_net

socat and netcat

socat is a helpful utility on the host to interface with the network, for

example to open a UDP port and print on incoming packets on the command line,

the following command can be used:

socat UDP-LISTEN:8889,fork stdout

Similarly we can use netcat to connect to a port and send a payload:

nc 172.31.0.10 6337

The integration tests s05_redis_smoke and s04_userspace_rumprt_net make use

of those tool to verify that networking is working as expected.

tcpdump

tcpdump is another handy tool to see all packets that are exchanged on a given

interface etc. For debugging nrk network issues, this command is useful as it displays

all packets on tap0:

tcpdump -i tap0 -vvv -XX

Tracing

Use Intel PT (processor-trace). TBD.

Benchmarking

This chapter provides notes and pointers on how to set-up and run applications for benchmarking and run various OS micro-benchmarks.

Microbenchmarks

File-system

The code contains an implementation of the

fxmark

benchmark suite. The benchmark code is located at usr/init/src/fxmark.

To run the fxmark benchmarks invoke the following command:

RUST_TEST_THREADS=1 cargo test --test s10* -- s10_fxmark_bench --nocapture

fxmark supports several different file benchmarks:

- drbh: Read a shared block in a shared file

- drbl: Read a block in a private file.

- dwol: Overwrite a block in a private file.

- dwom: Overwrite a private block in a shared file.

- mwrl: Rename a private file in a private directory.

- mwrm: Move a private file to a shared directory.

- mix: Access/overwrite a random block (with fixed percentages) in a shared file.

By default the integration test might not run all benchmarks, you can modify the CI code to change what benchmarks are run or study it to determine how to supply the correct arguments to

run.py.

Address-space

The following integration tests benchmark the address-space in nrk:

-

s10_vmops_benchmark: This benchmark repeatedly inserts the same frame over and over in the process' address space, while varying the number of cores that do insertions. Every core works in its own partition of the address space. The system measures the throughput (operations per second). -

s10_vmops_latency_benchmark: Same ass10_vmops_benchmark, but measure latency instead of throughput. -

s10_vmops_unmaplat_latency_benchmark: The benchmark maps a frame in the address space, then spawns a series of threads on other cores that access the frame, afterwards it unmaps the frame and measures the latency of the unmap operation (the latency is dominated by completing the TLB shootdown protocol on all cores). -

s10_shootdown_simple: The benchmark measures the overhead in the kernel for programming the APIC and sending IPIs to initiate and complete the shootdown protocol.

The benchmark code is located at usr/init/src/vmops/. To invoke the

benchmarks, run:

RUST_TEST_THREADS=1 cargo test --test s10* -- s10_vmops_benchmark --nocapture

RUST_TEST_THREADS=1 cargo test --test s10* -- s10_vmops_latency_benchmark --nocapture

RUST_TEST_THREADS=1 cargo test --test s10* -- s10_vmops_unmaplat_latency_benchmark --nocapture

RUST_TEST_THREADS=1 cargo test --test s10* -- s10_shootdown_simple --nocapture

Network

TBD.

Benchmarking Redis

Redis is a simple, single-threaded key--value store written in C. It is a useful test to measure single-threaded performance of the system.

Automated integration tests

The easiest way to run redis on nrk, is to invoke the redis integration tests directly:

s05_redis_smokewill spawn nrk with a redis instance, connect to it usingncand issue a few commands to test basic functionality.s10_redis_benchmark_virtioands10_redis_benchmark_e1000will spawn nrk with a redis instance and launch theredis-benchmarkCLI tool on the host for benchmarking. The results obtained by redis-benchmark are parsed and written intoredis_benchmark.csv. Thevirtioande1000suffix indicate which network driver is used.

cd kernel

# Runs both _virtio and _e1000 redis benchmark tests

RUST_TEST_THREADS=1 cargo test --test s10* -- s10_redis_benchmark

Launch redis manually

You can also do the steps that the integration test does manually. We start a

DHCP server first. The apparmor teardown is necessary if you don't have a